The NUS Guangzhou Research Translation and Innovation Institute is committed to building a platform for interdisciplinary and cross-domain scientific research exchange, promoting the effective transformation and application of research achievements. We will regularly share cutting-edge research results and updates in this section. We welcome your engagement and discussions!

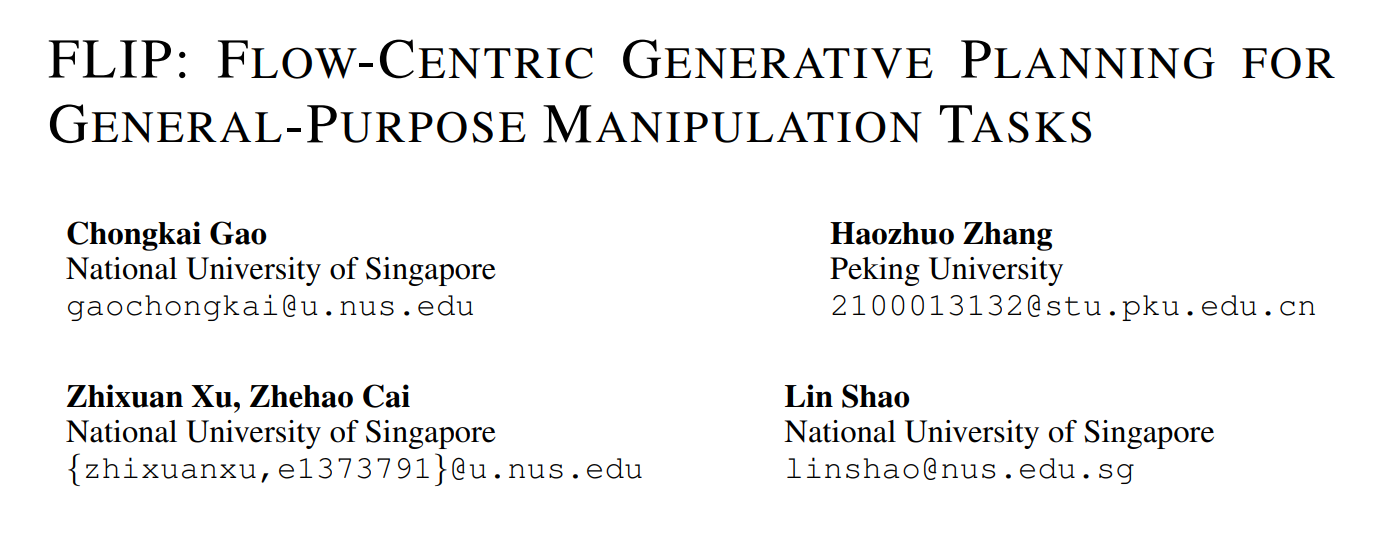

All authors of this paper are affiliated with LinS Lab at the National University of Singapore (NUS). The first author is Chongkai Gao, a Ph.D. candidate at NUS. The other authors include Haozhuo Zhang, an intern from Peking University; Zhixuan Xu, a Ph.D. candidate at NUS; and Zhehao Cai, a master's student at NUS. The corresponding author is Lin Shao, Assistant Professor at NUS. Professor Shao is also a Ph.D. supervisor at the NUS Guangzhou Research Translation and Innovation Institute (NUS GRTII).

Humans possess a general capability to plan and solve complex, long-horizon tasks, which proves invaluable when handling intricate operations in daily life. This capability can be described as follows: when faced with a task, humans first consider possible current actions, predict potential outcomes through imaginative simulation, and finally evaluate these outcomes using common sense to select the optimal action for task completion. Such a world-model-based search algorithm forms the foundational ability that allows humans to tackle open-world manipulation tasks. At the core of this ability is the human brain's construction of a "world model" of the physical environment, coupled with a general-purpose value function. Together, these components grant us the capacity to imagine and plan future states of objects and scenarios. Can robots similarly acquire this understanding and imaginative capability about the physical world, enabling them to plan future steps before task execution?

In recent years, robotics technology has advanced rapidly, leading to the emergence of an increasing number of intelligent robots. However, compared to humans, current robots still struggle significantly when handling complex, multi-stage tasks. They typically rely on task-specific data and predefined instructions or utilize large models predominantly for planning simple grasping tasks. Consequently, robots lack the human-like flexibility necessary for effectively planning and executing general, complex manipulation tasks. A central challenge in robotics research is developing a human-like "world model" that enables generalized task planning capabilities.

Recently, Professor Lin Shao's team from the National University of Singapore (NUS) introduced FLIP: a video-space task search and planning framework based on world models. This framework is applicable to general robotic manipulation tasks, including manipulation of deformable objects and dexterous hand tasks. FLIP performs task planning directly within the robot's visual space, utilizing specially designed modules for action proposal, dynamics prediction, and value function estimation within a world-model-based planning approach. The framework also features scalability in terms of model parameters. The work has been published at ICLR 2025 and was selected as an Oral Presentation at the CoRL 2024 LEAP Workshop.

· Paper Title: FLIP: Flow-Centric Generative Planning as General-Purpose Manipulation World Model

· Webpage: https://nus-lins-lab.github.io/flipweb/

· arXiv: https://arxiv.org/abs/2412.08261

· Code: https://github.com/HeegerGao/FLIP

1. Introduction

World models are learned models that simulate or represent an environment. Using a world model, an agent can internally imagine, reason, and plan, thereby performing tasks more safely and efficiently. Recent advances in generative models, particularly in video generation, have shown that models trained on internet-scale data can generate high-quality videos and thus act as world simulators. World models have opened new avenues in various fields, particularly robotic manipulation, which is the primary focus of this paper.

The intelligence of general-purpose robots can be broadly categorized into two levels: firstly, high-level abstract planning based on multimodal input, and secondly, concrete task execution through interaction with the real-world environment. A well-designed world model effectively supports the first capability, enabling model-based planning. Such a model must be interactive, capable of simulating environmental states based on given actions. Central to this framework is the identification of a general and scalable action representation connecting high-level planning with low-level execution. This action representation must fulfill two requirements: it must describe diverse motions involving different objects, robots, and tasks, and it should be easy to collect large-scale training data for scalability. Existing approaches often rely on linguistic descriptions as high-level actions or directly use low-level robot actions for interaction with world models. However, these methods have limitations, such as requiring additional data or annotation processes or failing to capture fine-grained actions like precise manipulations by dexterous hands. These constraints motivate us to explore more effective action representations. Furthermore, existing world models lack appropriate value functions for outcome evaluation, restricting future planning primarily to greedy search methods, thus limiting true task-space exploration.

Image flow is a dynamic representation describing pixel-level temporal changes in images. It can universally and succinctly represent diverse movements of robots and objects, offering greater precision and accuracy than language-based descriptions. Additionally, image flow can be directly obtained from video data using existing video-tracking tools. Existing research has also shown that image flow is highly effective for training low-level manipulation policies, making it an ideal action representation for world models. However, how to utilize image flow effectively for robot manipulation task planning remains unexplored.

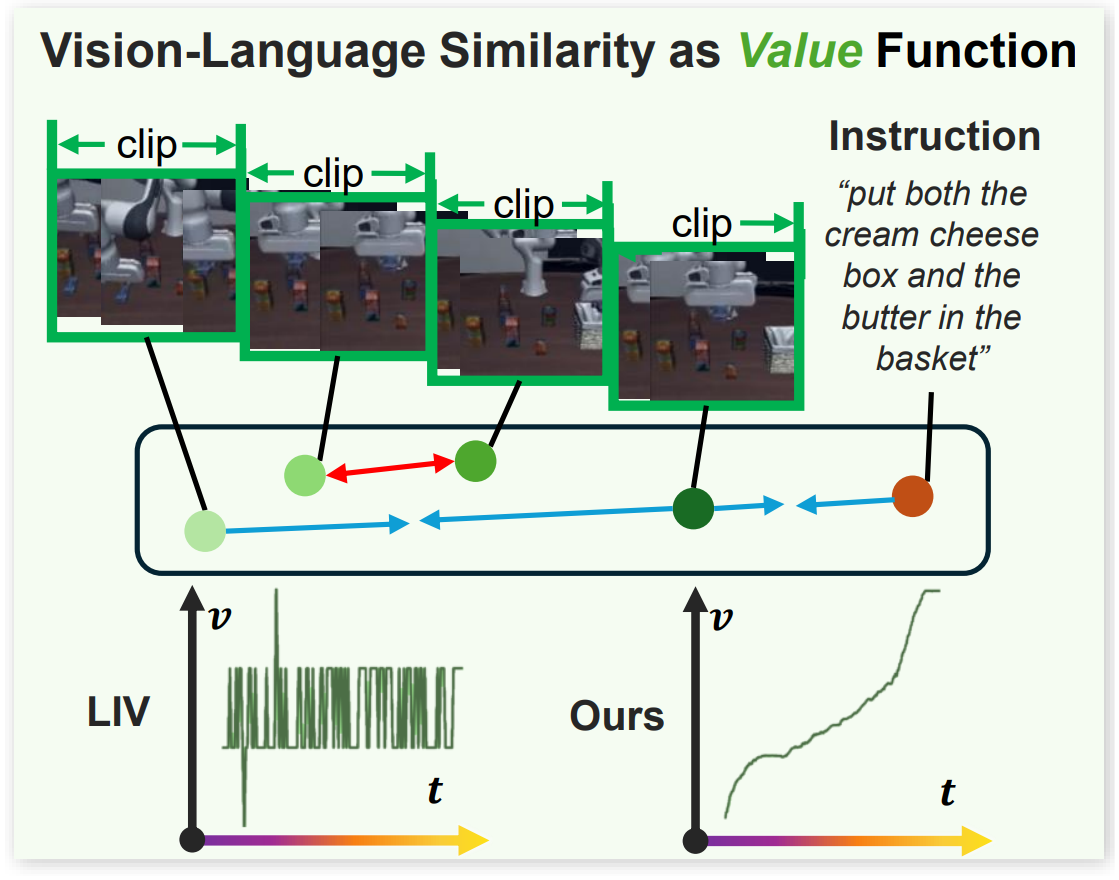

In this paper, we propose FLIP (Flow-Centric Generative Planning), a general-purpose robot manipulation planning method centered around image flow. Specifically, we train an image-flow-centric world model from video data annotated with linguistic descriptions. This world model consists of three modules: an action generation network producing image flows, a dynamics model generating videos from these flows, and a value model conducting visual-language evaluations. We design a novel training method integrating these modules to achieve model-based planning: given an initial image and task goals, the action module generates multiple image flow proposals, the dynamics model predicts short-term video outcomes, and the value module evaluates these outcomes to guide long-term planning via tree search.

Experimental results demonstrate that FLIP effectively solves various robot manipulation tasks in both simulated and real-world environments, such as cloth folding and unfolding, while generating high-quality long-term video predictions. These image flow and video plans can further guide low-level policy training. Additionally, we demonstrate that each module of FLIP outperforms existing methods. Further experiments confirm FLIP's ability to simulate complex robot manipulation tasks effectively, exhibiting strong interactivity, zero-shot transfer, and scalability.

The main contributions of this paper are:

• The proposal of FLIP, an image-flow-centric general-purpose robot manipulation planning method, realizing an interactive world model.

• The design of an image flow generation network, a flow-conditioned video generation network, and a novel visual-language representation model training method as core components of FLIP.

• Experimental validation showcasing the generality and superiority of FLIP across various tasks, highlighting its outstanding long-term planning capabilities, video generation quality, and ability to guide policy training.

Figure 1 The FLIP framework.

2. Three Key Modules of FLIP

We model robotic manipulation tasks as Markov Decision Processes (MDPs) and aim to address these tasks by learning both a world model and a low-level policy. The world model performs model-based planning in the spaces of images and image flows to maximize cumulative rewards, synthesizing long-horizon plans, while the low-level policy is responsible for executing these plans in real-world environments. We train the world model exclusively on video datasets annotated with linguistic instructions, ensuring generality and scalability. The low-level policy is trained on a smaller dataset annotated with explicit actions. To achieve model-based planning, our world model comprises three key modules, which we detail in the subsequent sections.

2.1 Image Flow Generation as a General Action Module

The action module of FLIP is an image flow generation network that produces image flows (i.e., trajectories of query points over future time steps) to serve as actions in planning. We adopt a generative model rather than a predictive model because, during model-based planning, the action module must provide multiple diverse action candidates for sample-based planning methods. Specifically, given a history of observed images up to time step t, a linguistic goal, and a set of two-dimensional query points, the image flow generation network predicts the coordinates of these query points over the subsequent L time steps (including the current one).

One crucial issue involves training data annotation. Query point image flows can be directly extracted from raw video data using existing video-point tracking models such as CoTracker. However, selecting appropriate query points remains challenging. Previous methods either rely on automatic segmentation models to choose points within regions of interest or sample points according to predefined ratios in dynamic and static areas. These approaches present two primary limitations: modern segmentation models (e.g., SAM) struggle to accurately segment complex scenes, and in long-duration videos, objects may enter or leave the frame, rendering the initial frame's query points inadequate. To address these challenges, we uniformly sample dense grid query points across each entire image at every time step, overcoming segmentation inaccuracies. Additionally, we track only short-duration video segments starting from each frame of a long video sequence, mitigating issues caused by objects entering or exiting frames. Thus, even if objects enter or exit, their impact is restricted to short-term segments. Specifically, for every frame in the dataset, we uniformly sample candidate grid points and employ the CoTracker tool to generate image flows for future L-step video segments.
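The annotation scheme described above can be illustrated with a minimal sketch. It only builds the dense grid of query points and the short per-frame windows that a point tracker would then process; the tracker call itself is omitted. The function names, the cell-center placement of grid points, and the choice to skip windows that run past the end of the video are assumptions made for this illustration, not details from the paper.

```python
import numpy as np

def grid_query_points(height, width, points_per_side):
    """Return a (points_per_side**2, 2) array of (x, y) pixel coordinates on a uniform grid."""
    # Place points at cell centers so that none sits exactly on the image border.
    xs = (np.arange(points_per_side) + 0.5) * width / points_per_side
    ys = (np.arange(points_per_side) + 0.5) * height / points_per_side
    gx, gy = np.meshgrid(xs, ys)
    return np.stack([gx.ravel(), gy.ravel()], axis=-1)

def short_segments(num_frames, horizon):
    """Yield (start, end) frame indices (end exclusive), one window per start frame.

    Assumption: start frames without a full window of `horizon` frames are skipped.
    """
    for start in range(num_frames - horizon + 1):
        yield start, start + horizon

# Hypothetical sizes: a 128x128 image, a 4x4 grid, a 10-frame video, L = 4.
points = grid_query_points(128, 128, 4)
segments = list(short_segments(num_frames=10, horizon=4))
```

Each `(start, end)` window would be tracked independently with the same grid re-sampled at its first frame, so an object that enters or leaves the view only affects the windows that overlap that event.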

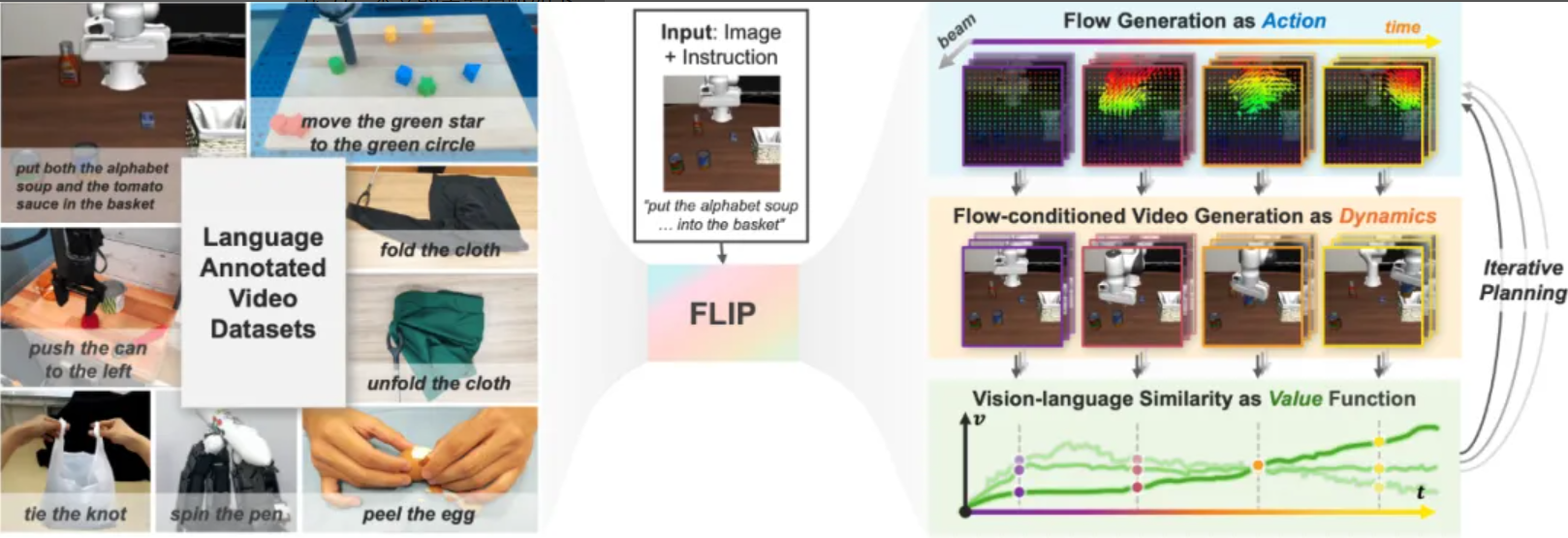

As depicted in Figure 2, we designed a Transformer-based conditional Variational Autoencoder (VAE) for image flow generation. Unlike previous approaches predicting absolute coordinates, we found that predicting relative displacements yields superior results. In the VAE encoder, we encode real image flows, converting observation histories into image patches. Language embeddings are produced using the Llama language model and converted into tokens, which are concatenated with a CLS token for information aggregation and input into the Transformer encoder. The output at the CLS position serves as the latent variable for the VAE. In the decoder, we first encode query points at the current time step t into query tokens, concatenating them with image and language tokens, and the latent variable z sampled via reparameterization. These are input into another Transformer encoder, and the outputs at the query token positions pass through two Multi-Layer Perceptrons (MLPs) to predict displacement magnitudes and directions for the next L steps, reconstructing the complete future image flow incrementally. Simultaneously, we perform an auxiliary image reconstruction task from the outputs at the image token positions, which has proven beneficial for improving model training accuracy.
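To make the relative-displacement idea concrete, here is a small sketch of how per-step magnitudes and directions could be accumulated into a full trajectory. It assumes directions are given as 2D vectors and that the returned trajectory contains the current position followed by one position per predicted displacement; how the paper actually parameterizes directions is not stated in this text, so treat the function and its shapes as hypothetical.

```python
import numpy as np

def reconstruct_flow(start_points, magnitudes, directions):
    """Accumulate per-step displacements into absolute point trajectories.

    start_points: (N, 2) query point coordinates at the current time step.
    magnitudes:   (N, K) predicted displacement lengths for K future steps.
    directions:   (N, K, 2) predicted direction vectors (normalized here).
    Returns:      (N, K + 1, 2) positions, starting with the current one.
    """
    norms = np.linalg.norm(directions, axis=-1, keepdims=True)
    unit = directions / np.maximum(norms, 1e-8)      # guard against zero vectors
    steps = magnitudes[..., None] * unit             # (N, K, 2) per-step offsets
    offsets = np.cumsum(steps, axis=1)               # running sum over time
    future = start_points[:, None, :] + offsets
    return np.concatenate([start_points[:, None, :], future], axis=1)

# One point that moves 1 pixel right, then 2 pixels down.
flow = reconstruct_flow(
    np.array([[0.0, 0.0]]),
    np.array([[1.0, 2.0]]),
    np.array([[[1.0, 0.0], [0.0, 1.0]]]),
)
```

Because each step is expressed relative to the previous position, the network only has to predict small, locally meaningful quantities, and the absolute coordinates follow from the cumulative sum.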

Figure 2 Action module and Dynamics module.

2.2 Image-Flow Conditioned Video Generation Model as the Dynamics Module

Our second module is a dynamics module, an image-flow conditioned video generation network, designed to generate subsequent video frames based on the current image observation history, linguistic goals, and predicted image flows. This facilitates iterative planning for the next steps. We developed a novel latent-space video diffusion model capable of effectively incorporating multimodal conditions such as images, image flows, and language. This model builds upon the DiT architecture and integrates spatial-temporal attention mechanisms. Here, we emphasize our multimodal condition handling mechanism. In traditional DiT and previous trajectory-conditioned video diffusion models, Adaptive Layer Normalization Zero (AdaLN-Zero) is commonly employed for condition processing (e.g., diffusion steps and class labels), which compresses all conditional information into scalars and lacks fine-grained interaction with complex conditions like images and image flows. To address this issue, we propose a hybrid condition processing mechanism tailored for multimodal conditional generation.

Specifically, we utilize a cross-attention mechanism enabling fine-grained interaction between image-flow conditions (represented as target point tokens) and the observation conditions alongside noisy frames. Historical image observations are directly concatenated with Gaussian noise frames. Additionally, we continue employing the AdaLN-Zero mechanism for global conditions such as diffusion steps and language instructions to guide the diffusion process comprehensively. To maintain clarity in observation conditions, we neither add noise to historical observations nor perform denoising on them during the diffusion process.
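The split between global and fine-grained conditioning can be sketched in a few lines of NumPy. This is a heavily simplified illustration, not the paper's architecture: it uses a single attention head without learned projections, omits self-attention and the feed-forward layers, and all names (`hybrid_block`, `w_mod`) are hypothetical. It shows only the two pathways: a global embedding that is regressed into shift, scale, and gate vectors in the AdaLN-Zero style, and flow tokens that are injected through cross-attention.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, context):
    """Single-head attention without learned projections (a simplification)."""
    d = queries.shape[-1]
    weights = softmax(queries @ context.T / np.sqrt(d))
    return weights @ context

def hybrid_block(frame_tokens, flow_tokens, global_cond, w_mod):
    """
    frame_tokens: (T, D) tokens from history frames concatenated with noisy frames.
    flow_tokens:  (P, D) tokens built from the image flow target points.
    global_cond:  (C,) embedding of the diffusion step and language instruction.
    w_mod:        (C, 3 * D) weights that regress shift, scale, and gate.
    """
    shift, scale, gate = np.split(global_cond @ w_mod, 3)
    h = layer_norm(frame_tokens) * (1.0 + scale) + shift   # global modulation
    h = cross_attention(h, flow_tokens)                    # fine-grained flow conditioning
    return frame_tokens + gate * h                         # gated residual update

rng = np.random.default_rng(0)
frames = rng.normal(size=(5, 8))
flows = rng.normal(size=(3, 8))
cond = rng.normal(size=(4,))
out_zero_init = hybrid_block(frames, flows, cond, np.zeros((4, 24)))
out_random = hybrid_block(frames, flows, cond, rng.normal(size=(4, 24)))
```

With `w_mod` set to zero the gate is zero and the block returns its input unchanged, which is the identity-at-initialization behavior that gives AdaLN-Zero its name.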

2.3 Visual-Language Representation Learning as the Value Function Module

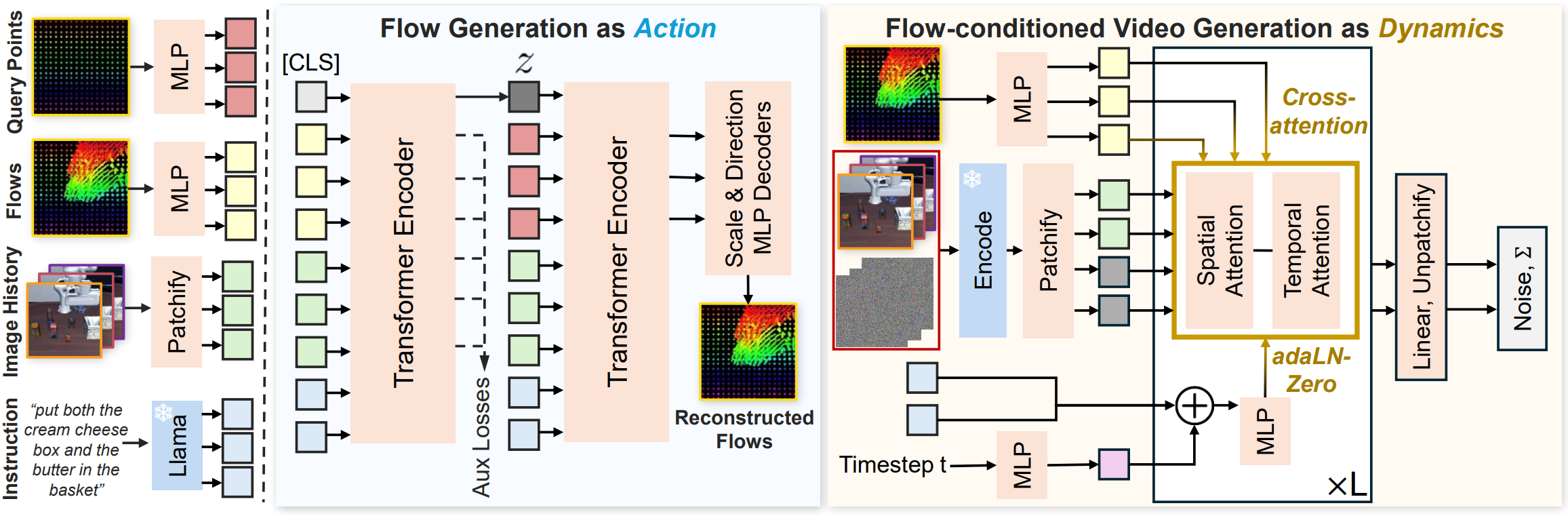

The value module of FLIP evaluates current images based on linguistic goals to produce a value function estimate V̂_t for model-based planning in image space: V̂_t = V(o_t, g). In this study, we utilize the LIV model as the value function. LIV initially learns a shared visual-language representation from action-free videos annotated with language and subsequently computes the value based on similarity between current images and goal language descriptions. Specifically, LIV measures the value through a weighted cosine similarity between image and language embeddings. The pre-trained LIV model requires fine-tuning on new tasks to yield effective value representations. The original fine-tuning losses include an image loss and a language-image loss; the former increases similarity between starting and ending frames via temporal contrastive learning, maintaining a (discounted) fixed embedding distance between adjacent frames, while the latter encourages greater similarity between goal images and goal language descriptions.
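As a minimal sketch of the similarity-based value described above, the following function scores an image embedding against a goal-language embedding. The embeddings are assumed to come from already-trained encoders that are not shown, and the scalar `weight` is a hypothetical stand-in for whatever weighting the actual model applies.

```python
import numpy as np

def cosine_value(image_embedding, goal_embedding, weight=1.0):
    """Weighted cosine similarity between an image and a goal-language embedding."""
    num = float(np.dot(image_embedding, goal_embedding))
    den = float(np.linalg.norm(image_embedding) * np.linalg.norm(goal_embedding))
    return weight * num / max(den, 1e-8)   # guard against zero-length embeddings

aligned = cosine_value(np.array([1.0, 0.0]), np.array([2.0, 0.0]))
orthogonal = cosine_value(np.array([1.0, 0.0]), np.array([0.0, 3.0]))
```

An observation whose embedding points in the same direction as the goal embedding receives the highest value, regardless of the embedding norms.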

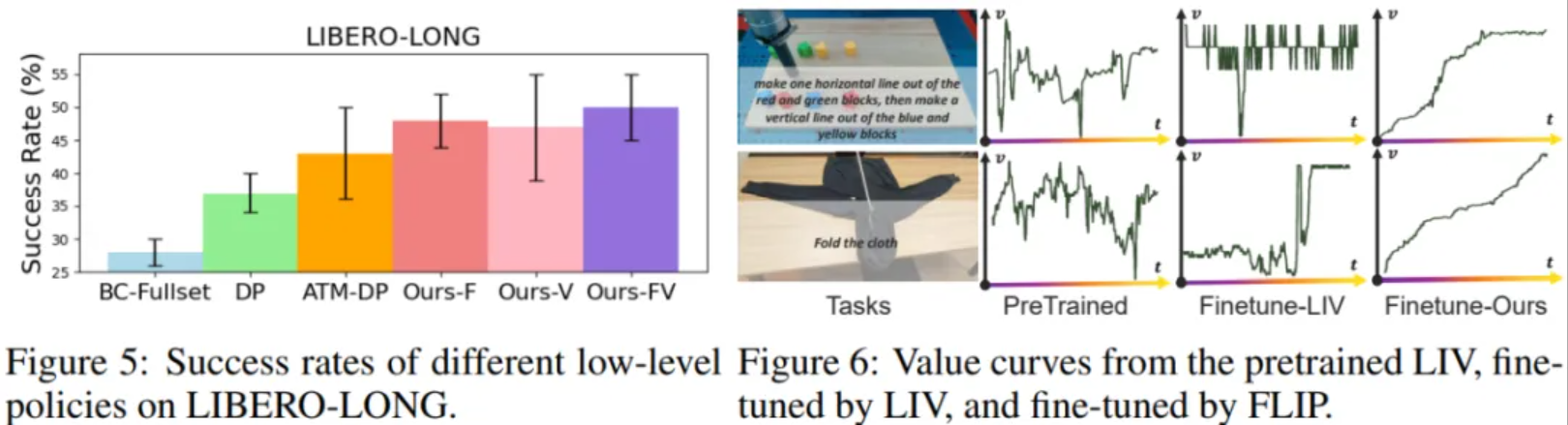

However, we observed that the original fine-tuning method performs poorly with long-duration and imperfect video data, leading to substantial fluctuations in the fine-tuned value curves. Such fluctuations adversely affect sampling-based planning algorithms, which typically expect smooth value curves. For example, demonstration videos in which the robotic arm pauses or hesitates produce unstable value estimates. To mitigate this issue, we replace the concept of "adjacent frames" with "adjacent states" in the original loss function, defining states as short-duration video segments. Specifically, we segment long videos into multiple fixed-length segments, treating each as the minimal unit of the video. This adjustment yields noticeably smoother value curves during planning, as illustrated in Figure 3.
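The change from adjacent frames to adjacent states amounts to a different way of indexing the training video. A minimal sketch, assuming that trailing frames which do not fill a whole segment are dropped (the text does not say how the remainder is handled) and using hypothetical function names:

```python
import numpy as np

def segment_video(frames, segment_length):
    """Split (T, ...) frames into (T // segment_length, segment_length, ...) segments.

    Assumption: leftover frames at the end of the video are discarded.
    """
    num_segments = len(frames) // segment_length
    usable = frames[: num_segments * segment_length]
    return usable.reshape(num_segments, segment_length, *frames.shape[1:])

def adjacent_state_pairs(num_segments):
    """Index pairs of neighboring segments, used in place of neighboring frames."""
    return [(i, i + 1) for i in range(num_segments - 1)]

video = np.zeros((10, 4, 4, 3))          # 10 dummy frames of size 4x4x3
states = segment_video(video, segment_length=3)
pairs = adjacent_state_pairs(len(states))
```

A temporal contrastive loss would then be computed over `pairs` of segments rather than over consecutive frames, so that a short pause inside one segment no longer produces its own training pair.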

Figure 3 Value function module.

3. Flow-based World Model Planning Algorithm

3.1 Model-based Planning with Image Flows, Video, and Value Functions

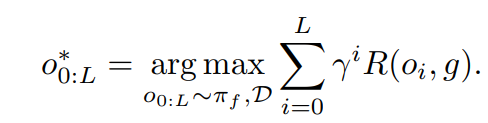

Generating long-horizon videos in an autoregressive manner typically lacks sufficient accuracy. Therefore, we adopt a model-based planning approach utilizing the image flow action module and video generation module to plan future video frames by maximizing cumulative discounted returns. Formally, this planning process can be represented as follows:
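As a reading aid, one way to write such an objective in LaTeX is sketched here. The notation is assumed for illustration and may differ from the paper: a_t is the image-flow action at step t, o_t the observation, g the language goal, γ the discount factor, R the reward, π the action module, and D the dynamics module.

```latex
\max_{a_{0:T-1}} \; \sum_{t=0}^{T-1} \gamma^{t} \, R(o_{t+1}, g)
\quad \text{subject to} \quad
a_t \sim \pi(\cdot \mid o_{\le t}, g), \qquad
o_{t+1} \sim D(\cdot \mid o_{\le t}, a_t, g)
```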

According to the Bellman equation, this is equivalent to selecting, at each step, the next state that maximizes the sum of immediate rewards and the value of future states. Our reward mechanism is designed to encourage finding the shortest possible planning path. We use a Hill Climbing method to solve this planning problem, which involves initializing B planning beams. At each time step t, the action module generates multiple candidate image-flow actions based on the current observation history and language goal; the dynamics module then generates several short-term future video segments based on these image flows. Subsequently, the value module evaluates these generated videos, selecting the top A videos with the highest rewards for the next iteration. To prevent over-reliance on anomalous states during planning, we periodically replace beams with the lowest value with those having the highest value. The algorithm is summarized in Figure 4.
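The search loop can be sketched with toy stand-ins for the three modules. In this illustration a state is an integer, the action module proposes a random step, the dynamics module adds the step to the state, and the value module measures closeness to a goal. All function and parameter names are hypothetical, and the sketch keeps only the single best candidate per beam, which is a simplification of the top-A selection described above.

```python
import random

def plan(initial_state, propose, simulate, value,
         num_beams=2, num_candidates=3, horizon=5, replace_every=2):
    """Beam-style hill climbing; each beam holds a (state, actions_so_far) pair."""
    beams = [(initial_state, []) for _ in range(num_beams)]
    for step in range(1, horizon + 1):
        new_beams = []
        for state, actions in beams:
            # Action module stand-in: sample several candidate actions.
            candidates = [propose(state) for _ in range(num_candidates)]
            # Dynamics module stand-in: predict the outcome of each candidate.
            outcomes = [(simulate(state, a), a) for a in candidates]
            # Value module stand-in: keep the highest-valued outcome.
            best_state, best_action = max(outcomes, key=lambda sa: value(sa[0]))
            new_beams.append((best_state, actions + [best_action]))
        beams = new_beams
        if step % replace_every == 0:
            # Periodically overwrite the weakest beam with the strongest one.
            beams.sort(key=lambda b: value(b[0]))
            beams[0] = beams[-1]
    return max(beams, key=lambda b: value(b[0]))

rng = random.Random(0)
goal = 10
final_state, actions = plan(
    initial_state=0,
    propose=lambda s: rng.choice([-1, 0, 1, 2]),
    simulate=lambda s, a: s + a,
    value=lambda s: -abs(goal - s),
)
```

In the real system the proposals would be image flows, the simulated outcomes would be short generated video segments, and the value would come from the fine-tuned visual-language model; the control flow is the part this sketch is meant to convey.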

3.2 Implementation of the Low-level Policy

The low-level policy of FLIP is responsible for executing the planned actions. Given the current image history, language goal, image-flow actions, and short-term video segments generated by the video generation module, this policy predicts the specific low-level robotic actions, enabling the robot to perform tasks in the real-world environment. We trained multiple policies, each conditioned on different types of input information, using only a small amount of demonstration data.

Figure 4 World-model based planning algorithm.

4. Experimental Results

4.1 Model-Based Planning Results on Robot Manipulation Tasks

In this section, we first demonstrate that FLIP can: 1) perform model-based planning across various robotic manipulation tasks; 2) synthesize long-horizon videos (≥ 200 frames); and 3) guide low-level policies in executing tasks in both simulated and real-world environments. We then separately evaluate the action module, dynamics module, and value module, and showcase FLIP’s interactivity, zero-shot transfer capability, and scalability.

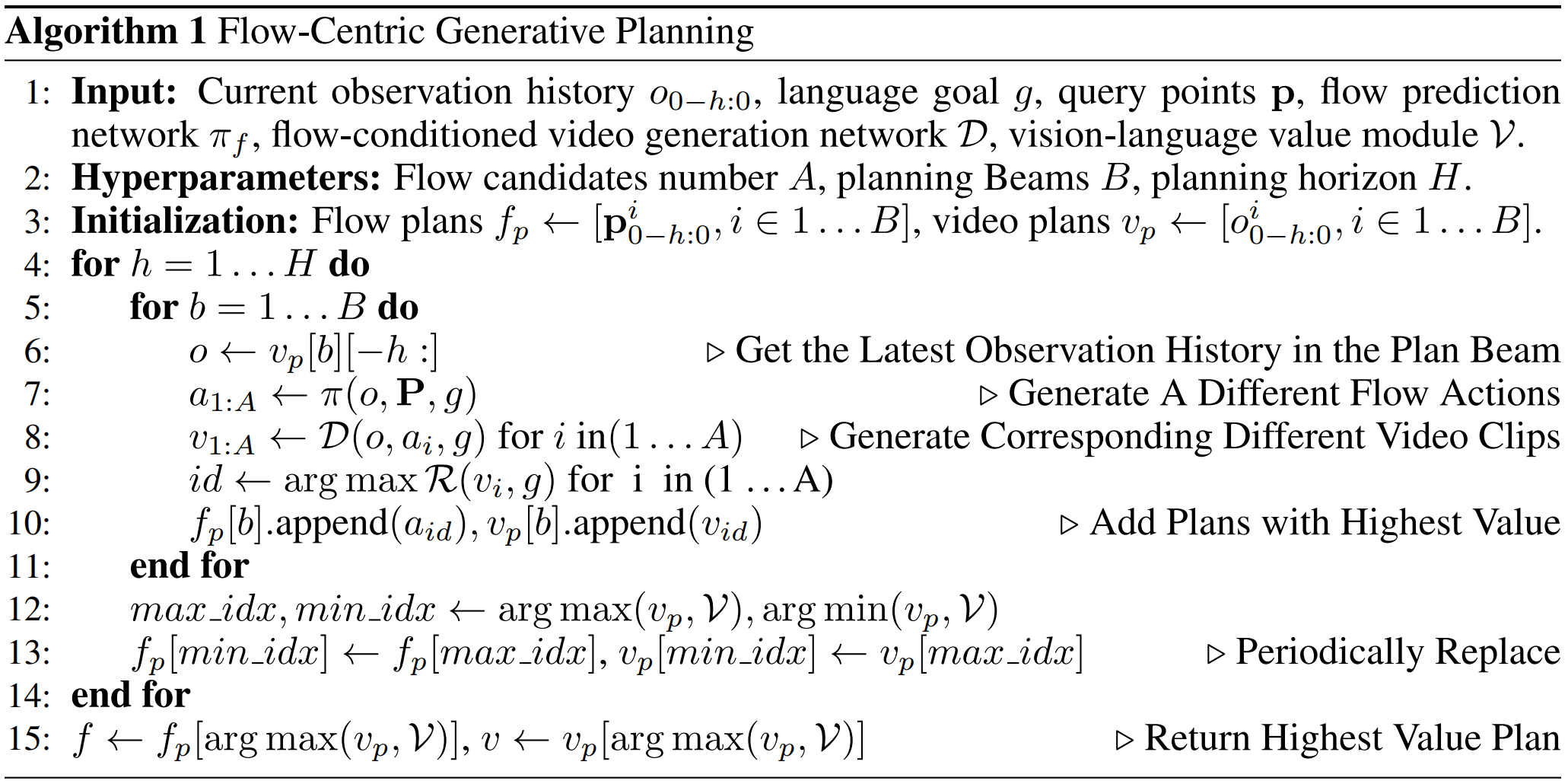

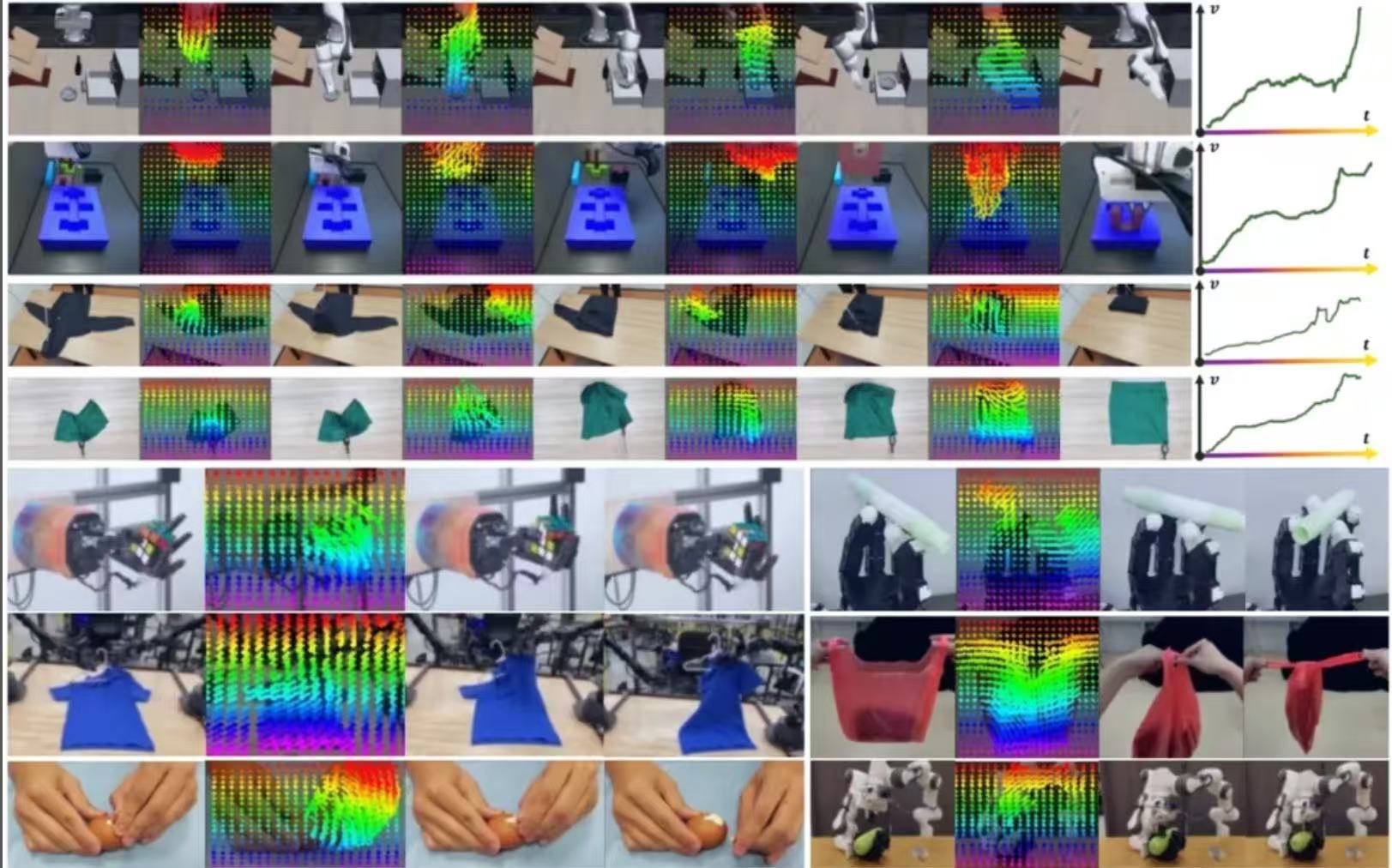

Experimental Setup. We use four benchmarks to evaluate FLIP's planning capability. The model takes an initial image and language instruction as inputs, searching within the image-flow and video spaces to synthesize task plans. The first benchmark is LIBERO-LONG, a simulated benchmark comprising 10 long-horizon desktop manipulation tasks, where we train with 50×10 videos of resolution 128×128×3 and test on 50×10 new random initializations. The second benchmark is FMB, involving object manipulation and assembly tasks; training is conducted on 1,000 single-object multi-stage videos and 100 multi-object multi-stage videos (128×128×3 resolution), tested on 50 new initializations. The third and fourth benchmarks involve cloth folding and unfolding tasks, trained with 40 videos each from different viewpoints and tested on 10 new viewpoints (resolution 96×128×3). Evaluation is performed by manually verifying if the generated videos successfully complete the tasks. We compare our approach with two baseline methods: 1) UniPi, a text-based video generation approach; and 2) FLIP-NV, the FLIP version without the value module.

Results. As shown in Figure 5, UniPi exhibits lower success rates, indicating the difficulty of directly generating long videos. FLIP-NV outperforms UniPi, suggesting that image flows effectively guide video generation. FLIP surpasses all baselines, demonstrating the importance of the value module for model-based planning.

4.2 Long-Horizon Video Generation Evaluation

Experimental Setup. In this section, we quantitatively assess the quality of long-horizon videos generated by FLIP and compare them with other video generation models. We use benchmarks including LIBERO-LONG, FMB, cloth folding/unfolding, and Bridge-V2 for evaluation, with videos typically exceeding 200 frames (except for Bridge-V2). The selected baseline methods are LVDM (an advanced text-to-video generation method) and IRASim (a video generation method conditioned on robot arm trajectories). Evaluation metrics include latent-space L2 distance, pixel-space PSNR, and FVD video quality measures.
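Of the metrics listed, pixel-space PSNR is the simplest to state precisely. The sketch below uses the standard definition, 10 · log10(MAX² / MSE), and assumes 8-bit frames with a maximum value of 255; it is not taken from the authors' evaluation code.

```python
import numpy as np

def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two frames or videos of equal shape."""
    ref = np.asarray(reference, dtype=np.float64)
    gen = np.asarray(generated, dtype=np.float64)
    mse = np.mean((ref - gen) ** 2)
    if mse == 0:
        return float("inf")              # identical inputs
    return 10.0 * np.log10(max_value ** 2 / mse)

black = np.zeros((4, 4, 3), dtype=np.uint8)
white = np.full((4, 4, 3), 255, dtype=np.uint8)
worst_case = psnr(black, white)          # maximal error for 8-bit images
identical = psnr(black, black)
```

Higher PSNR means the generated frames are closer to the ground truth in pixel space, whereas FVD and latent-space L2 capture perceptual and feature-level differences that PSNR misses.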

Figure 5 Quantitative experiment results.

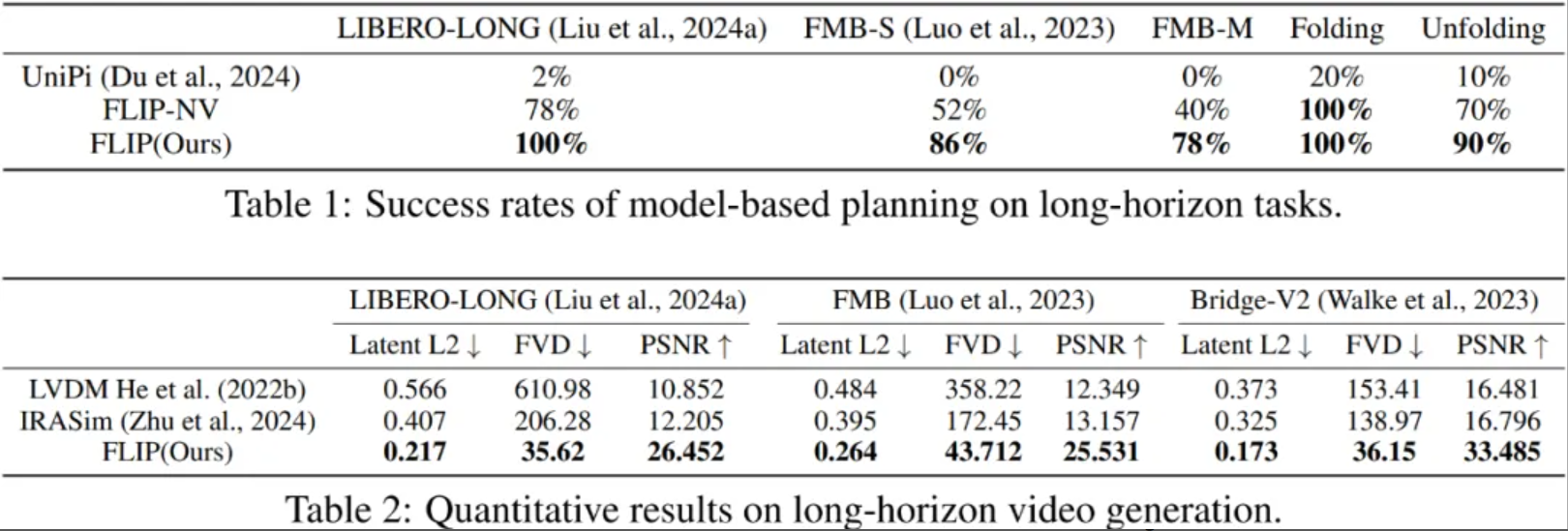

The results are presented in Figure 5. FLIP outperforms baseline methods across all datasets. LVDM performs relatively well on the shorter Bridge-V2 benchmark but struggles on long-video benchmarks such as LIBERO-LONG and FMB. IRASim shows improvements over LVDM, highlighting the importance of trajectory guidance. However, due to its autoregressive generation approach, it still falls short compared to FLIP, which synthesizes high-quality videos by leveraging model-based planning and stitching shorter video segments. The generally weaker performance on the FMB benchmark can be attributed to instantaneous jumps frequently present in the training videos; FLIP partially mitigates this issue through its reliance on historical observations. Additionally, we qualitatively demonstrate FLIP's applicability in complex long-horizon tasks, including ALOHA, pen spinning, robot medication retrieval, tying plastic bags, and human egg peeling tasks, as illustrated in Figure 6.

Figure 6 Results of model-based planning for manipulation tasks.

4.3 Plan-Guided Low-Level Policy Experiments

Experimental Setup. In this section, we investigate how generated image flows and video plans can serve as conditions to train manipulation policies for task execution. The primary question is determining whether image flows, videos, or a combination of both are more suitable for guiding policy learning. We evaluate using the LIBERO-LONG benchmark, training each task with 10 action-annotated videos and 50 action-free videos. During inference, FLIP acts as a closed-loop policy, re-planning after each execution segment. We compare our approach against ATM and its diffusion policy variant, as well as OpenVLA (zero-shot and fine-tuned versions).

Results Analysis. As shown in Figure 7, our proposed planning-guided policies achieve higher success rates compared to diffusion policies and ATM-DP, indicating that dense image flow information and high-quality future videos as conditions are superior to sparse image flow information alone. The policy guided by both image flows and videos (Ours-FV) achieves the best performance, suggesting that combining image flows and videos as conditional inputs significantly enhances policy success rates. Additionally, policies guided solely by videos (Ours-V) perform reasonably well but show considerable performance fluctuations when the robot deviates from training trajectories, reducing video generation quality. Incorporating image flows as additional conditions notably reduces the variance in success rates, highlighting the stability provided by image flow predictions.

Figure 7 Success rates of the low-level models based on image flows, and effectiveness of FLIP's value function module.

4.4 Experimental Validation of FLIP's Fundamental Properties

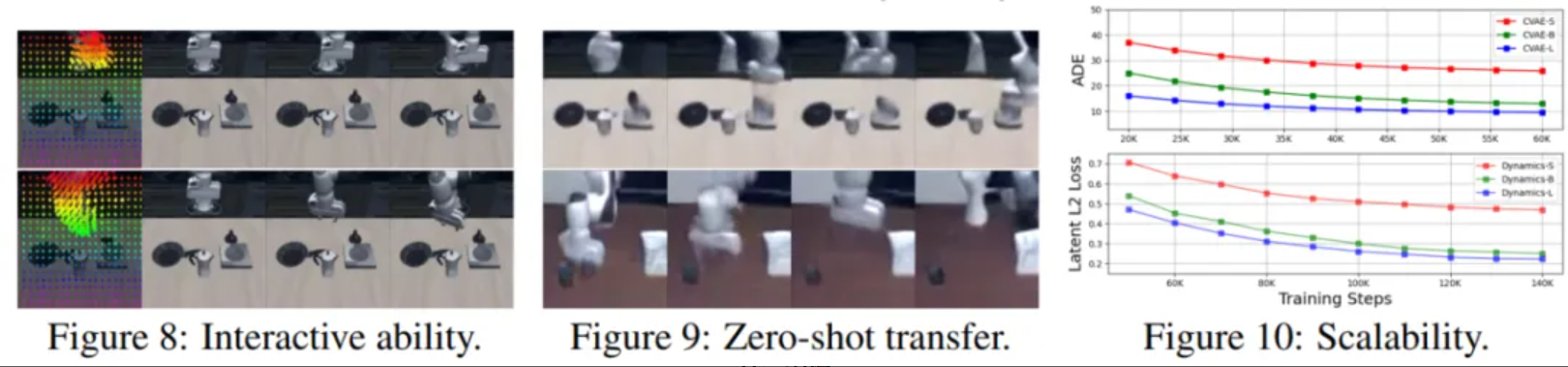

To demonstrate several key characteristics of FLIP, additional experiments were conducted on benchmarks such as LIBERO-LONG, with results illustrated in Figure 8.

Interactive World Model Capability. We validated the interactivity of the trained dynamics module, demonstrating its ability to generate corresponding videos based on user-specified image flows. Experiments confirm that the module accurately responds to custom-defined image flows by generating matching video outputs.

Zero-Shot Transfer Capability. We showed that the pre-trained FLIP model effectively handles unseen tasks without additional fine-tuning, successfully generating natural robotic actions, thereby highlighting FLIP's capability for knowledge transfer.

Scalability. By training on large-scale video datasets, FLIP exhibited strong scalability. Even when handling numerous complex tasks and extensive video data, the model maintained robust performance in planning and video generation.

Figure 8 Three properties of FLIP.

5. Conclusion

In this research, we proposed FLIP, a general-purpose robotic manipulation planning method centered on image flows. FLIP achieves generalized planning across diverse manipulation tasks via image flow and video generation. Despite FLIP's strong performance, two limitations remain. First, planning is relatively slow because it requires extensive video generation, which limits the method to quasi-static manipulation tasks. Second, FLIP currently does not utilize the physical attributes and three-dimensional information of scenes. Future research may consider developing world models that integrate physical properties and 3D scene information, thereby further broadening FLIP's applicability.

The NUS Guangzhou Research Translation and Innovation Institute primarily focuses on seven key areas: smart city, information and communication, electronic science and technology, advanced manufacturing, artificial intelligence, biological sciences, and financial technology. Relying on NUS’ world-class research capabilities, the Institute has engaged dozens of professors from NUS as academic leaders. These leaders guide local research teams in promoting scientific research, research translation, and postdoctoral training programs.

We look forward to working with global research experts, entrepreneurs, and policymakers to stimulate innovative thinking, explore the infinite possibilities of scientific research, and establish an interdisciplinary and cross-field ecosystem for scientific research and innovative applications.