The NUS Guangzhou Research Translation and Innovation Institute (NUS GRTII) is committed to building a platform for interdisciplinary, cross-domain research exchange and to promoting the effective translation and application of research achievements. We regularly share cutting-edge research results and updates in this section, and we welcome your engagement and discussion.

The research introduced in this article comes from the team led by Professor Shuzhi Sam Ge of the Department of Electrical and Computer Engineering at the National University of Singapore. Professor Ge also serves as Director of NUS GRTII and is a Fellow of the Academy of Engineering Singapore.

Professor Ge's team stood out in the Robust AI Grand Challenge hosted by AI Singapore (AISG) with this research, "Development of Stable, Robust and Secure Intelligent Systems for Autonomous Vehicles," securing first-stage funding of up to $4 million.

1. Project Name

Development of Stable, Robust and Secure Intelligent Systems for Autonomous Vehicles

2. Project Leader Introduction

Shuzhi Sam Ge, PhD, DIC, BSc, PEng

Professor, Department of Electrical and Computer Engineering, National University of Singapore

Director, NUS Guangzhou Research Translation and Innovation Institute

Fellow of the Academy of Engineering Singapore, IEEE, IFAC, and IET

Shuzhi Sam Ge is a Full Professor in the Department of Electrical and Computer Engineering at the National University of Singapore (NUS) and Director of NUS GRTII. He received his B.E. from Beijing University of Aeronautics and Astronautics in 1986 and his Ph.D. and DIC from Imperial College London in 1993. A globally recognized expert in intelligent control, robotics and artificial intelligence, he has been named a Clarivate Highly Cited Researcher every year since 2016. Professor Ge has led numerous major research initiatives and technology transfer platforms, with significant impact in advanced manufacturing, intelligent robotics, unmanned systems, and stable artificial intelligence. He serves as Editor-in-Chief, Senior Editor or Associate Editor of leading journals and holds key roles, including President and Vice President, in organizations within ACA, IEEE and IFAC.

3. Project Introduction

This project focuses on developing a multi-view, multi-modal 3D perception system that integrates Bird’s-Eye View (BEV) representation with Transformer architectures to enhance the stability, robustness, and security of autonomous driving systems in complex physical environments.

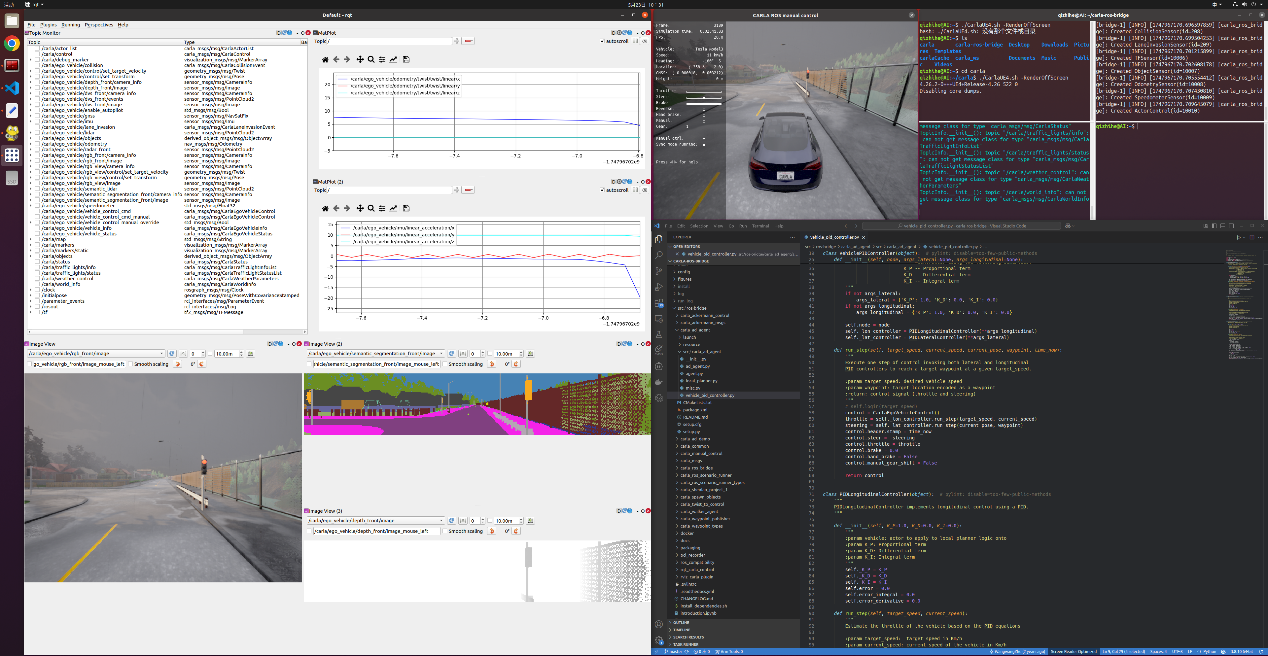

By unifying data from multiple cameras, LiDAR, and radar sensors into the BEV space, the system enables efficient 3D object detection and environmental mapping.
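As a rough illustration of what mapping sensor data into BEV space involves, the sketch below rasterizes LiDAR points into a top-down grid. It is not the project's implementation: the function name `points_to_bev`, the 100 m x 100 m extent, and the 0.5 m cell size are assumptions chosen for the example.

```python
import numpy as np

def points_to_bev(points, x_range=(-50.0, 50.0), y_range=(-50.0, 50.0), cell=0.5):
    """Rasterize (N, 3) points in the vehicle frame into BEV grids.

    Returns a per-cell point count and a per-cell maximum height.
    The extent and cell size are illustrative defaults, not project values.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))

    # Keep only the points that fall inside the grid extent.
    inside = ((points[:, 0] >= x_range[0]) & (points[:, 0] < x_range[1]) &
              (points[:, 1] >= y_range[0]) & (points[:, 1] < y_range[1]))
    pts = points[inside]

    # Convert metric x/y coordinates to integer cell indices.
    ix = np.clip(np.floor((pts[:, 0] - x_range[0]) / cell).astype(int), 0, nx - 1)
    iy = np.clip(np.floor((pts[:, 1] - y_range[0]) / cell).astype(int), 0, ny - 1)

    # Unbuffered accumulation so repeated indices are all counted.
    counts = np.zeros((nx, ny), dtype=np.int64)
    np.add.at(counts, (ix, iy), 1)

    # Cells with no points keep a height of -inf.
    max_height = np.full((nx, ny), -np.inf)
    np.maximum.at(max_height, (ix, iy), pts[:, 2])
    return counts, max_height
```

Camera and radar data would need their own projection into the same grid before the modalities can be combined cell by cell; this sketch covers only the LiDAR side.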

Key objectives include:

(1) Designing BEV perception networks capable of spatiotemporal feature fusion to improve understanding of dynamic scenes;

(2) Developing collaborative adversarial attack and defense mechanisms tailored for multi-modal inputs to enhance robustness in the physical world;

(3) Establishing an evaluation platform that combines digital twin simulations with real-world testing to systematically validate the performance of perception models.

This system aims to provide robust AI support for safety-critical applications like autonomous driving, advancing the field of multi-modal 3D perception.
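To make objective (2) more concrete, the following sketch applies the fast gradient sign method (FGSM), a widely used baseline attack, jointly to camera and LiDAR feature vectors that feed a toy logistic classifier. The source does not say which attacks or defenses the team uses; the model, the function names, and the per-modality budgets `eps_cam` and `eps_lidar` are all illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(p, y):
    """Binary cross-entropy for one prediction p and a label y in {0, 1}."""
    p = np.clip(p, 1e-12, 1.0 - 1e-12)
    return float(-(y * np.log(p) + (1 - y) * np.log(1 - p)))

def predict(x_cam, x_lidar, w_cam, w_lidar, b):
    """Toy two-modality classifier: one logit from both feature vectors."""
    return sigmoid(x_cam @ w_cam + x_lidar @ w_lidar + b)

def fgsm_multimodal(x_cam, x_lidar, y, w_cam, w_lidar, b, eps_cam, eps_lidar):
    """One FGSM step on both modalities, each with its own budget.

    For cross-entropy on a logistic model, the loss gradient with respect
    to an input vector is (p - y) * w, so no autodiff is needed here.
    """
    p = predict(x_cam, x_lidar, w_cam, w_lidar, b)
    grad_cam = (p - y) * w_cam
    grad_lidar = (p - y) * w_lidar
    # Step in the direction that increases the loss, bounded per modality.
    adv_cam = x_cam + eps_cam * np.sign(grad_cam)
    adv_lidar = x_lidar + eps_lidar * np.sign(grad_lidar)
    return adv_cam, adv_lidar
```

A matching defense, such as adversarial training, would feed perturbed inputs like these back into the training loop. Attacks in the physical world add constraints (printable patches, sensor noise) that this digital sketch does not model.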

4. Project Highlights

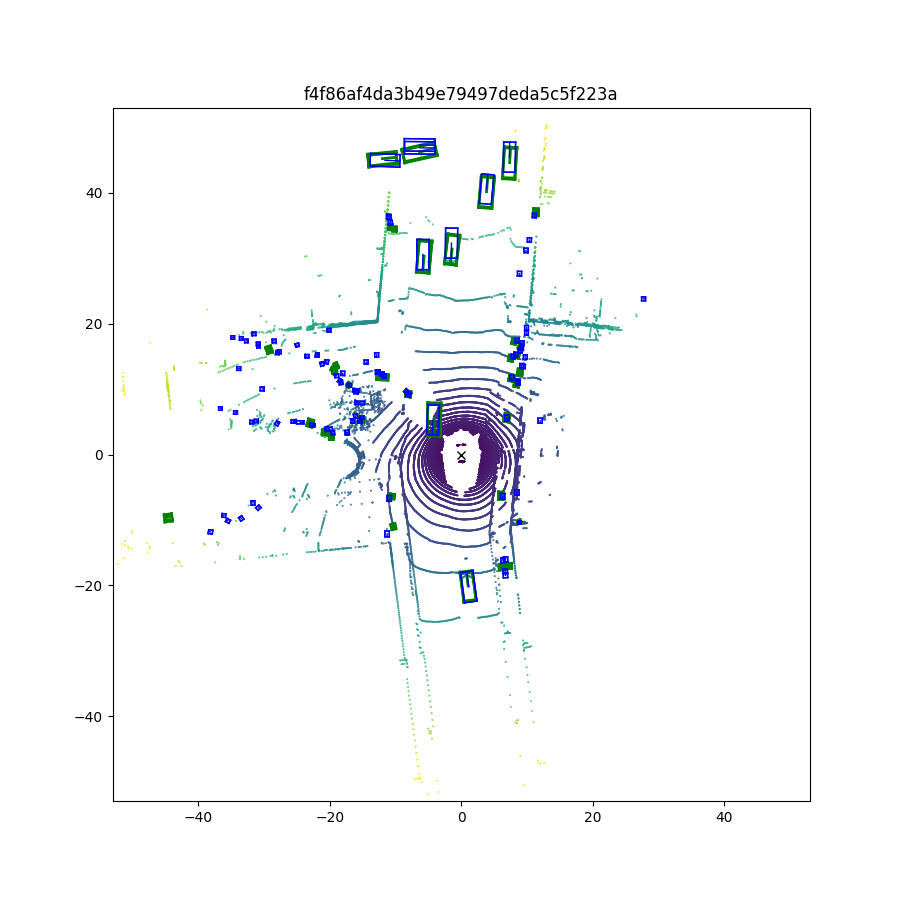

(1) Multi-View Image-Based Bird’s-Eye View 3D Detection

Figure: Multi-view 3D object detection based on bird’s-eye-view representation.

(a) Leverages spatial priors to construct BEV queries, aggregating features from six camera views into a unified bird’s-eye-view space to enhance 3D scene understanding.

(b) Incorporates temporal self-attention to model object trajectories across frames, enabling recovery from occlusions and stable detection of dynamic objects.

(c) Enables robust modeling of both static and dynamic targets in complex urban traffic, with strong performance in occlusion handling and multi-object discrimination.

In summary, this method significantly improves spatial modeling and robustness for camera-only systems in 3D detection, making it well-suited for dense and complex traffic scenarios.
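Points (a) and (b) above can be sketched in a few lines. The first function projects BEV reference points into one camera with a pinhole model, which is the step that decides where a BEV query may look for image features. The second lets the current BEV queries attend to the previous frame's BEV features. Both are simplified assumptions rather than the team's network: the names are hypothetical, the attention is dense with no learned projections, and ego-motion alignment between frames is omitted.

```python
import numpy as np

def project_to_camera(points_ego, cam_from_ego, K, image_size):
    """Project (N, 3) ego-frame points into one camera.

    cam_from_ego is a 4x4 rigid transform, K a 3x3 intrinsic matrix, and
    image_size is (width, height). Returns pixel coordinates (NaN for
    points behind the camera) and a boolean visibility mask.
    """
    n = points_ego.shape[0]
    homog = np.concatenate([points_ego, np.ones((n, 1))], axis=1)
    cam = (cam_from_ego @ homog.T).T[:, :3]

    # Only points with positive depth can appear in the image.
    in_front = cam[:, 2] > 1e-3
    uv = np.full((n, 2), np.nan)
    proj = (K @ cam[in_front].T).T
    uv[in_front] = proj[:, :2] / proj[:, 2:3]

    width, height = image_size
    visible = in_front.copy()
    u, v = uv[in_front, 0], uv[in_front, 1]
    visible[in_front] = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    return uv, visible

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def temporal_attention(bev_query, prev_bev):
    """Scaled dot-product attention from current queries to two frames.

    bev_query and prev_bev are (N, C) arrays of flattened BEV cells.
    Keys and values are the previous and current features stacked.
    """
    memory = np.concatenate([prev_bev, bev_query], axis=0)       # (2N, C)
    scores = bev_query @ memory.T / np.sqrt(bev_query.shape[1])  # (N, 2N)
    weights = softmax(scores, axis=-1)
    return weights @ memory, weights
```

In a six-camera setup, `project_to_camera` would be called once per camera, and each BEV query would gather features only from the views where its reference points are visible.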

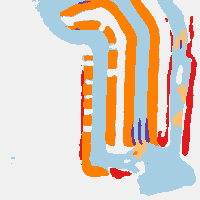

(2) Robust 3D Perception through Fusion of Image and Point Cloud Data

Figure panels: 3D object detection using the image modality; road segmentation based on BEV features.

(a) Projects image and LiDAR data into a shared BEV space, preserving both semantic richness and geometric structure to enhance multimodal feature alignment.

(b) Utilizes precomputation and efficient feature pooling to accelerate view transformation and achieve low-latency, well-aligned feature fusion.

(c) Demonstrates superior robustness and environmental adaptability under challenging conditions like rain or low light, enabling deployment in safety-critical scenarios.

In summary, this fused perception system offers strong cross-sensor compatibility and scene generalization, greatly enhancing the reliability and robustness of autonomous systems in the physical world.
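The precomputation idea in (b) can be illustrated as follows. When camera calibration is fixed, the BEV cell that each camera feature point lands in does not change between frames, so the index mapping can be computed once and reused. This is a minimal sketch under that assumption; the function names, the sum pooling, and the channel-wise concatenation used for fusion are illustrative choices, not details confirmed by the source.

```python
import numpy as np

def precompute_cell_index(points_xy, x_min, y_min, cell, nx, ny):
    """Map (N, 2) ground-plane coordinates to flat BEV cell indices.

    Points outside the grid get index -1. With fixed calibration this
    runs once, not on every frame.
    """
    ix = np.floor((points_xy[:, 0] - x_min) / cell).astype(int)
    iy = np.floor((points_xy[:, 1] - y_min) / cell).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    return np.where(inside, ix * ny + iy, -1)

def bev_pool(features, cell_index, nx, ny):
    """Sum-pool (N, C) features into an (nx, ny, C) grid per frame."""
    keep = cell_index >= 0
    grid = np.zeros((nx * ny, features.shape[1]))
    # Unbuffered add so several points in one cell are all accumulated.
    np.add.at(grid, cell_index[keep], features[keep])
    return grid.reshape(nx, ny, -1)

def fuse_bev(cam_bev, lidar_bev):
    """Fuse two aligned BEV grids by concatenating their channels."""
    return np.concatenate([cam_bev, lidar_bev], axis=-1)
```

Because both modalities end up on the same grid, downstream heads for detection or road segmentation can operate on the fused tensor without knowing which sensor contributed each channel.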

We look forward to working with global research experts, entrepreneurs, and policymakers to stimulate innovative thinking, explore the infinite possibilities of scientific research, and establish an interdisciplinary and cross-field ecosystem for scientific research and innovative applications.